Artificial intelligence (AI) has already made its way into medical clinics, where it assists in analyzing imaging data such as X-rays and scans. With the rapid development of sophisticated large language models, however, the discussion now extends to whether AI should play a role in end-of-life medical decisions.

Rebecca Weintraub Brendel, director of the Harvard Medical School Center for Bioethics, recently spoke with the Harvard Gazette about the complexities of using AI in such critical decisions and the ethical implications they raise.

The Key Considerations in AI-Assisted End-of-Life Decisions

Brendel emphasizes that end-of-life decision-making, like all medical decision-making, is fundamentally about respecting a patient’s wishes—provided they are competent and their choices are not medically contraindicated. However, challenges arise when patients are incapacitated and unable to communicate their preferences.

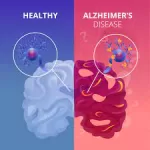

Another significant consideration is how patients process their decisions, both cognitively and emotionally. Some people say they would never want to live with certain disabilities, yet real-world experience shows that people can adapt and often report a better quality of life than they expected, even after severe injuries or with chronic illness.

AI’s Role in Prognostic Assessments

Brendel acknowledges that AI could be a valuable tool for gathering and processing vast amounts of data, which could yield clearer prognostic insights. This could be particularly useful when patients are choosing between aggressive treatment and palliative care. AI may help predict likely outcomes without some of the biases that human judgment can carry.

However, she warns against allowing AI to make final determinations in life-or-death matters. AI can provide probability assessments, such as a 5% chance of survival, but it cannot account for the deeply personal and contextual factors that shape human choices. AI should remain a support system rather than the ultimate decision-maker.

Ethical and Humanitarian Considerations

Brendel stresses the need for humility in medical decisions, particularly when dealing with patients who lack advance directives or family involvement. In such cases, medical teams often have to make informed assumptions, and AI could help by offering more precise prognostic data. Still, the human element of respect, empathy, and moral responsibility must remain central.

She also raises concerns about how AI might reshape the role of healthcare professionals. If AI comes to outperform physicians in diagnostics and prognostics, what will remain the defining function of doctors? Brendel believes that human interaction, bedside manner, and moral leadership will remain irreplaceable elements of medical care.

AI, Global Health, and Justice

AI’s integration into healthcare raises broader societal questions about equity and justice. In many parts of the world, access to quality healthcare is limited. AI could provide much-needed assistance where human doctors are scarce, but it must be deployed with a commitment to the ethical and just distribution of care.

As AI continues to evolve, bioethicists, healthcare professionals, and policymakers must ensure that its implementation aligns with human values. The challenge is not only technical but also deeply moral: how do we use AI to enhance care while preserving dignity and respect for human life?

Disclaimer:

This article is based on insights provided by Harvard Medical School and does not constitute medical or legal advice. AI in healthcare remains an evolving field, and ethical considerations should be carefully weighed by healthcare professionals and policymakers.