In a new study, researchers report that they have, for the first time, combined artificial intelligence (AI) techniques with simultaneous neuroimaging of two interacting people (dual-brain neuroimaging, also known as hyperscanning) to explore how human interactions relate to brain activity. The approach aims to narrow the gap between tightly controlled laboratory settings and real-life communication, offering new insights into the neural synchrony that occurs during interpersonal exchanges.

The study, titled “Emotional Content and Semantic Structure of Dialogues are Associated with Interpersonal Neural Synchrony in the Prefrontal Cortex,” has been published in the journal NeuroImage. It was authored by Alessandro Carollo, Gianluca Esposito, Massimo Stella, and Andrea Bizzego from the University of Trento, in collaboration with Mengyu Lim of Nanyang Technological University in Singapore.

Decoding the Brain’s Response to Interaction

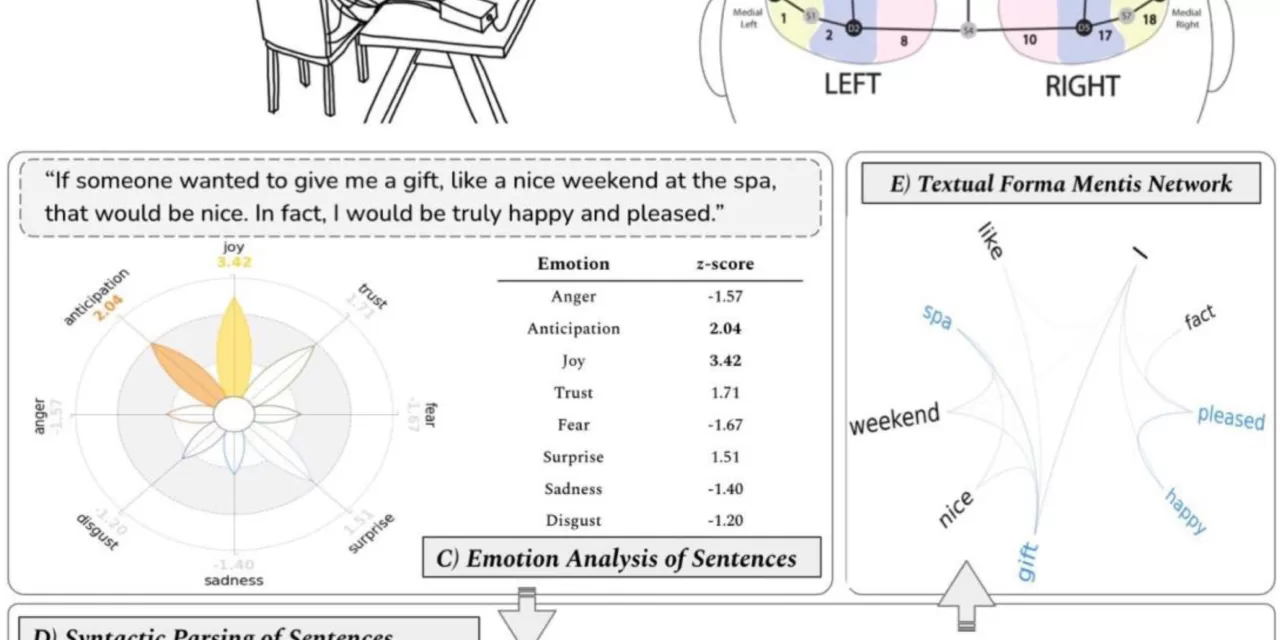

The research aimed to understand the neural mechanisms underlying social interactions such as conversations, gift exchanges, and cooperative tasks. Using AI-based language analysis together with functional near-infrared spectroscopy (fNIRS), the team examined how the emotional content and linguistic structure of dialogues relate to brain activity in pairs of participants.

“For the first time, we have combined AI techniques with neuroimaging measurements obtained on two people at the same time,” stated Carollo, the study’s lead author. “We worked in a laboratory setting but allowed participants more freedom in their dialogues and interactions to mimic real-life scenarios more closely.”

The study involved 42 pairs of participants (84 individuals) aged 18 to 35. Their conversations were manually transcribed and then analyzed with AI algorithms to assess emotional content as well as syntactic and semantic features. Meanwhile, fNIRS, which estimates brain activity from changes in blood oxygenation measured through the absorption of near-infrared light, continuously recorded activity in the prefrontal cortex of both partners at once, allowing the researchers to measure how closely their neural signals synchronized during the interactions.
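The article does not say which synchrony measure the team used, so the sketch below is only a generic illustration of the idea of interpersonal neural synchrony, not the authors' method. It assumes one prefrontal fNIRS channel per partner and scores synchrony as the Pearson correlation of the two time series within sliding windows. Many fNIRS hyperscanning studies use more elaborate measures such as wavelet transform coherence. The function names `pearson` and `windowed_synchrony`, the window and step sizes, and the toy signals are all hypothetical.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("inputs must have the same length (at least 2 samples)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        # A perfectly flat window has no variation to correlate.
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def windowed_synchrony(
    signal_a: Sequence[float],
    signal_b: Sequence[float],
    window: int,
    step: int,
) -> List[float]:
    """Hypothetical helper: correlate two partners' signals in sliding
    windows of `window` samples, advancing by `step` samples each time."""
    if len(signal_a) != len(signal_b):
        raise ValueError("both recordings must have the same number of samples")
    if window < 2 or step < 1:
        raise ValueError("window must be >= 2 and step >= 1")
    scores = []
    for start in range(0, len(signal_a) - window + 1, step):
        end = start + window
        scores.append(pearson(signal_a[start:end], signal_b[start:end]))
    return scores


if __name__ == "__main__":
    # Synthetic toy signals standing in for one prefrontal channel per partner;
    # these are NOT data from the study.
    t = [i / 10 for i in range(200)]
    partner_a = [math.sin(x) for x in t]
    partner_b = [math.sin(x) + 0.3 * math.cos(3 * x) for x in t]
    scores = windowed_synchrony(partner_a, partner_b, window=50, step=25)
    print(f"{len(scores)} windows, mean synchrony = {sum(scores) / len(scores):.2f}")
```

Scores near 1 mean the two signals rise and fall together within a window, and scores near -1 mean they move in opposite directions. Pearson correlation is used here only for its simplicity. A real analysis would typically also preprocess the raw fNIRS data and compare true pairs against control pairings before interpreting the numbers.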

Bringing Research into Real Life

Like magnetic resonance imaging (MRI), fNIRS is non-invasive, but unlike MRI, which requires participants to lie still inside a large scanner, it is lightweight and portable. Carollo emphasized its practicality: “It only requires a small device, a pair of caps with cables, and a laptop, making it ideal for studying human interactions outside the laboratory.”

Esposito highlighted the study’s broader implications: “Our findings suggest that emotional content and language structure directly influence neural synchronization during interactions. This opens up exciting possibilities for studying parent-child bonding, romantic relationships, friendships, and even brief social encounters between strangers.”

By combining neuroimaging with AI-based analysis of language, the researchers hope their work will pave the way for future studies of social cognition and the neurobiological foundations of human interaction.

Disclaimer

This study is based on controlled experimental conditions and should not be interpreted as providing definitive conclusions about all aspects of human interaction. Further research is needed to validate findings across diverse populations and settings. The use of AI in neuroimaging is an evolving field, and results should be viewed in the context of ongoing scientific exploration.