DAVIS, CA – Researchers at the University of California, Davis, are pioneering a neuroengineering approach to restoring lost voices, drawing on technology originally developed for robotic limb control and gesture recognition. The project aims to give people who have lost the ability to speak because of medical conditions such as head and neck cancer their own, original voices back.

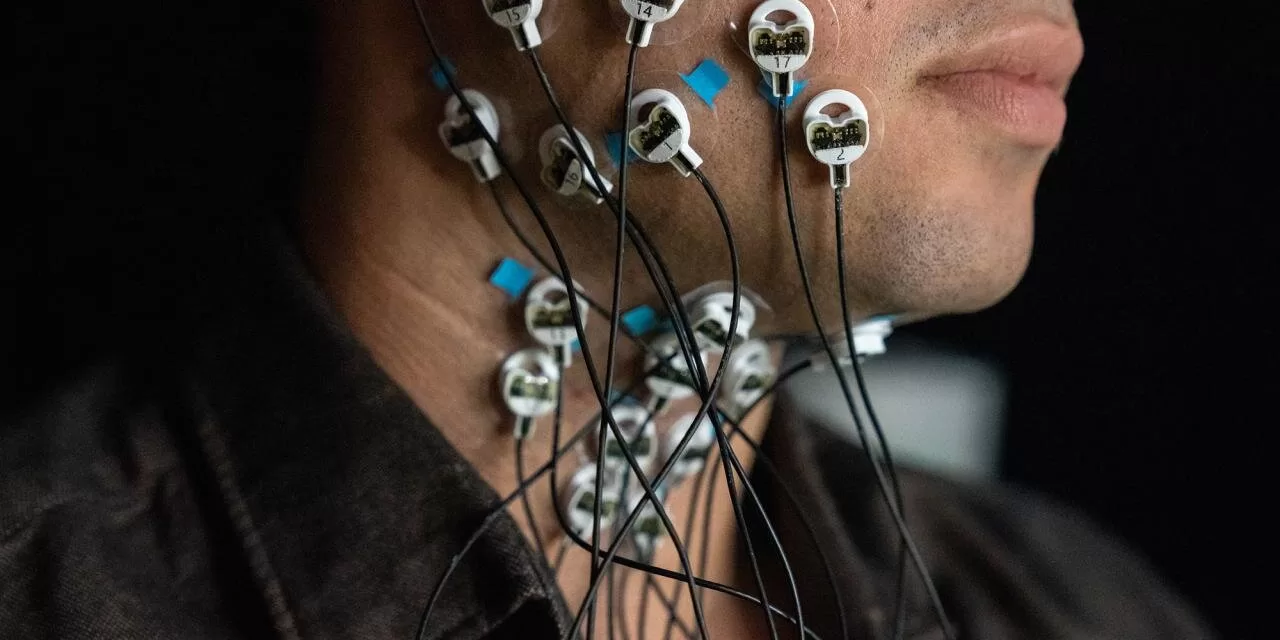

Lee Miller, a professor of neurobiology, physiology and behavior, and his team are adapting electromyographic (EMG) signal decoding to analyze the movements of facial and mouth muscles during speech. EMG decoding interprets the electrical activity of contracting muscles and has been used for robotic control and computer interaction.

“Our voice is so important to our sense of identity and empowerment,” said Miller, highlighting the profound impact of voice loss on individuals.

The research builds on Miller’s earlier work with Meta, published in the Journal of Neural Engineering (2024), which showed that EMG signals can be used to recognize and interpret gestures for natural computer interaction. Because EMG signals vary from person to person, the team focused on simplified relationships between the signals recorded at pairs of electrodes, producing a “gesture decoder that works for everybody.”
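To make the idea of “relationships between pairs of electrodes” more concrete, the sketch below represents each window of multichannel EMG by its channel covariance matrix. The off-diagonal entries of that matrix describe how each pair of electrodes varies together. Such matrices are symmetric positive definite, so they lie on a Riemannian manifold, the setting named in the paper’s title. The classifier shown is a simple log-Euclidean nearest-mean rule chosen for illustration. It is an assumption, not the authors’ published decoder. The function and class names (`covariance`, `spd_log`, `LogEuclideanNearestMean`), the gesture labels and the synthetic signals are all hypothetical, and the code requires NumPy.

```python
import numpy as np


def covariance(window: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Channel covariance of one EMG window (shape: samples x channels).

    Off-diagonal entries describe how each pair of electrodes co-varies.
    A small ridge (eps) keeps the matrix strictly positive definite.
    """
    centered = window - window.mean(axis=0)
    cov = centered.T @ centered / (window.shape[0] - 1)
    return cov + eps * np.eye(cov.shape[0])


def spd_log(mat: np.ndarray) -> np.ndarray:
    """Matrix logarithm of a symmetric positive definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.log(vals)) @ vecs.T  # V diag(log λ) V^T


class LogEuclideanNearestMean:
    """Hypothetical gesture classifier: nearest class mean in log-Euclidean space."""

    def fit(self, windows, labels):
        logs = np.array([spd_log(covariance(w)) for w in windows])
        labels = np.asarray(labels)
        self.classes_ = np.unique(labels)
        # The log-Euclidean mean of a class is the average of its matrix logarithms.
        self.means_ = np.array([logs[labels == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, windows):
        logs = np.array([spd_log(covariance(w)) for w in windows])
        # Frobenius distance between matrix logarithms = log-Euclidean distance.
        dists = np.linalg.norm(logs[:, None] - self.means_[None], axis=(2, 3))
        return self.classes_[dists.argmin(axis=1)]


# Synthetic stand-in for EMG: in both hypothetical gestures every channel has the
# same variance, but electrodes 0 and 1 are positively coupled for "fist" and
# negatively coupled for "pinch". Only the pairwise relationship differs.
rng = np.random.default_rng(0)
mixing = {
    "fist": np.array([[1.0, 0.8, 0, 0], [0.8, 1.0, 0, 0], [0, 0, 1.0, 0], [0, 0, 0, 1.0]]),
    "pinch": np.array([[1.0, -0.8, 0, 0], [-0.8, 1.0, 0, 0], [0, 0, 1.0, 0], [0, 0, 0, 1.0]]),
}


def synthetic_window(gesture: str, n_samples: int = 200) -> np.ndarray:
    sources = rng.normal(size=(n_samples, 4))
    return sources @ mixing[gesture].T


train = [(synthetic_window(g), g) for g in ("fist", "pinch") for _ in range(20)]
clf = LogEuclideanNearestMean().fit([w for w, _ in train], [g for _, g in train])
test = [synthetic_window("fist"), synthetic_window("pinch")]
print(clf.predict(test))  # expected: ['fist' 'pinch']
```

Working with covariance matrices discards each electrode’s raw waveform and keeps only how channels relate to one another, which is one plausible way to get features that transfer across people. A library such as pyRiemann offers more complete Riemannian classifiers for this kind of data.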

Applying this technology to speech, Miller and his colleagues, including graduate student Harsha Gowda, began working with healthy volunteers. They used EMG electrodes to record facial muscle activity during speech and trained a computer to match EMG patterns with the corresponding speech sounds, producing computer-generated speech tailored to each speaker.

“We don’t need that much data to clone the person’s voice,” Miller explained, noting that approximately five minutes of speech and EMG data are sufficient.

The team is now working to restore the voices of people who have undergone a laryngectomy (surgical removal of the larynx). They pair existing recordings of each person’s voice, such as family videos or audio diaries, with EMG and video data captured while the person speaks silently. This allows them to digitally recreate that individual’s unique voice.

The ultimate goal is a smartphone-based system that lets users silently mouth words into their phones, which would then translate their facial muscle movements into natural-sounding speech.

“Ultimately, we want this to work easily for anybody,” Miller said, acknowledging that further research and development are needed to make the system widely accessible.

The study, titled “Topology of surface electromyogram signals: hand gesture decoding on Riemannian manifolds,” was authored by Harshavardhana T Gowda et al. and published in the Journal of Neural Engineering (2024). DOI: 10.1088/1741-2552/ad5107.

Disclaimer: This article is based on information provided in the referenced research paper. While the findings are promising, it is important to note that this technology is still under development and further research, including clinical trials, is required before it can be widely implemented. The information provided in this article should not be interpreted as medical advice. Always consult with a healthcare professional before making any decisions related to your health or treatment.