A study published in the Proceedings of the National Academy of Sciences offers new insight into how the human brain processes feelings about the world around it. The research, co-authored by Edward A. Vessel, the Eugene Surowitz Assistant Professor of Computational Cognitive Neuroscience at the City University of New York Colin Powell School for Civic and Global Leadership, explores the relationship between perception and emotional responses.

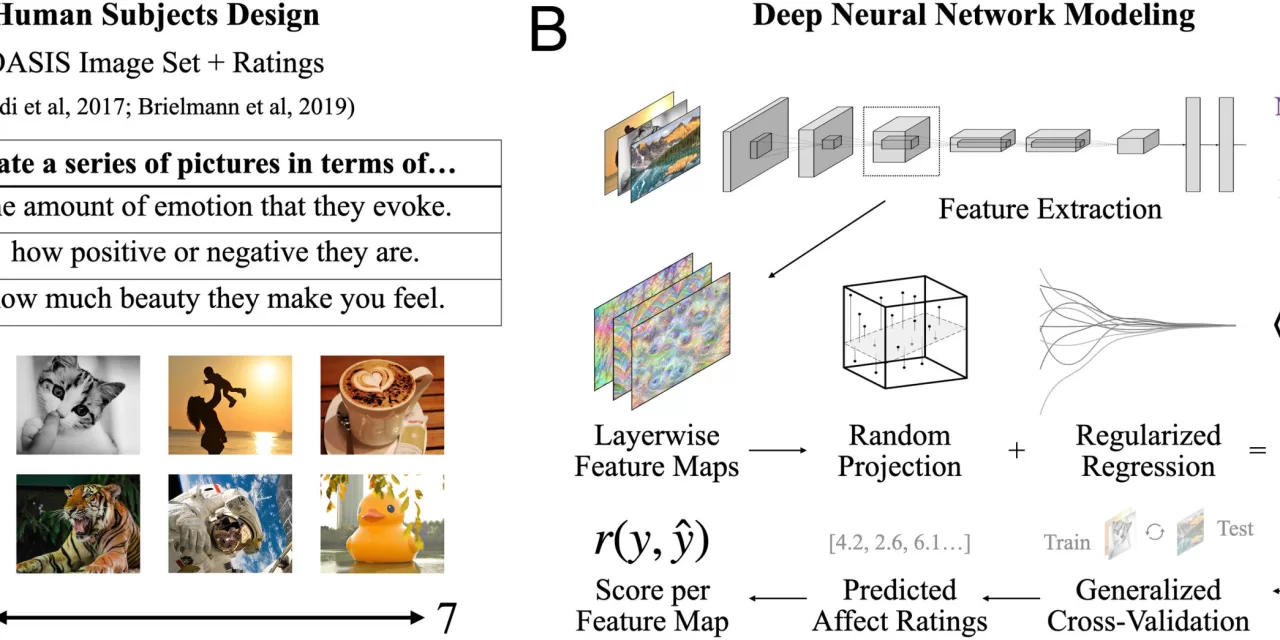

Titled “The Perceptual Primacy of Feeling: Affectless Visual Machines Explain a Majority of Variance in Human Visually Evoked Affect,” the study aimed to decipher how perceptual processes influence emotional experiences. The researchers used machine learning models designed to categorize image content, termed “visual machines,” which perceive visual stimuli but lack the ability to feel emotions.

“These systems are tuned to understand the world by mimicking human perception,” explained Vessel. “They are good at perception because they serve as strong models for how our brains take in information.”

The study suggests that when people observe an object, their brain compares it to their extensive internal knowledge base, built over a lifetime of visual experiences. Factors such as familiarity, uniqueness, and comprehension contribute to how the brain evaluates what is seen. Since direct measurement of this internal knowledge is not feasible, the researchers used machine learning as a proxy.

Their findings indicate that perceptual processes alone can predict a majority of the variance in human affective responses, including judgments of beauty, positivity, negativity, and arousal.
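For readers curious what "explaining variance" means in practice, the idea can be illustrated with a minimal sketch. It is not the study's code or data: the image features, the ratings, and the choice of ridge regression with five-fold cross-validation are all assumptions made for illustration. The sketch fits a linear model from hypothetical machine-vision features to hypothetical average ratings, then reports how much of the rating variance the model predicts on images it has not seen.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins (NOT data from the study): one feature vector and one
# average affect rating per image. Sizes and noise level are arbitrary.
n_images, n_features = 400, 50
features = rng.normal(size=(n_images, n_features))
true_weights = rng.normal(size=n_features)
perceptual_signal = features @ true_weights
noise = rng.normal(scale=0.5 * perceptual_signal.std(), size=n_images)
ratings = perceptual_signal + noise


def ridge_fit(X, y, alpha=1.0):
    """Closed-form ridge regression weights; X and y are centered by the caller."""
    n_feat = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_feat), X.T @ y)


def cross_validated_r2(X, y, n_folds=5, alpha=1.0):
    """Share of rating variance predicted on held-out images."""
    indices = np.arange(len(y))
    predictions = np.empty_like(y)
    for test_idx in np.array_split(indices, n_folds):
        train_idx = np.setdiff1d(indices, test_idx)
        # Center using training images only, so the test fold stays unseen.
        x_mean = X[train_idx].mean(axis=0)
        y_mean = y[train_idx].mean()
        w = ridge_fit(X[train_idx] - x_mean, y[train_idx] - y_mean, alpha)
        predictions[test_idx] = (X[test_idx] - x_mean) @ w + y_mean
    residual = np.sum((y - predictions) ** 2)
    total = np.sum((y - y.mean()) ** 2)
    return 1.0 - residual / total


r2 = cross_validated_r2(features, ratings)
print(f"Held-out variance explained: {r2:.2f}")
```

A value above 0.5 would correspond to "a majority of variance" in the sense of the paper's title. Scoring on held-out images matters here because a model with many features can fit the images it was trained on almost perfectly without capturing anything that generalizes.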

“A lot of affect may be less ‘emotional’ than we think it is,” Vessel noted, emphasizing that sensory experiences might play a more substantial role in shaping our feelings than many psychological theories suggest.

The implications of these findings extend beyond neuroscience, potentially influencing the development of artificial intelligence and machine learning. By understanding how perceptual processes shape emotions, future AI systems might be designed to interpret human affect with greater accuracy.

For further details, refer to the study: Colin Conwell et al., The Perceptual Primacy of Feeling: Affectless Visual Machines Explain a Majority of Variance in Human Visually Evoked Affect, Proceedings of the National Academy of Sciences (2025). DOI: 10.1073/pnas.2306025121.
