A new set of guidelines, known as FUTURE-AI, has been published to establish a framework for developing and deploying trustworthy artificial intelligence (AI) systems in healthcare. The initiative, the first of its kind, provides recommendations spanning the entire lifecycle of medical AI: design, development, validation, regulation, deployment, and ongoing monitoring.

The rapid advancement of AI in healthcare has yielded promising results in areas such as disease diagnosis and treatment outcome prediction. However, widespread adoption has been hindered by concerns about trust, safety, and ethics. Existing research has highlighted potential pitfalls, including errors that lead to patient harm, biases that exacerbate health inequalities, a lack of transparency and accountability, and vulnerability to data privacy and security breaches.

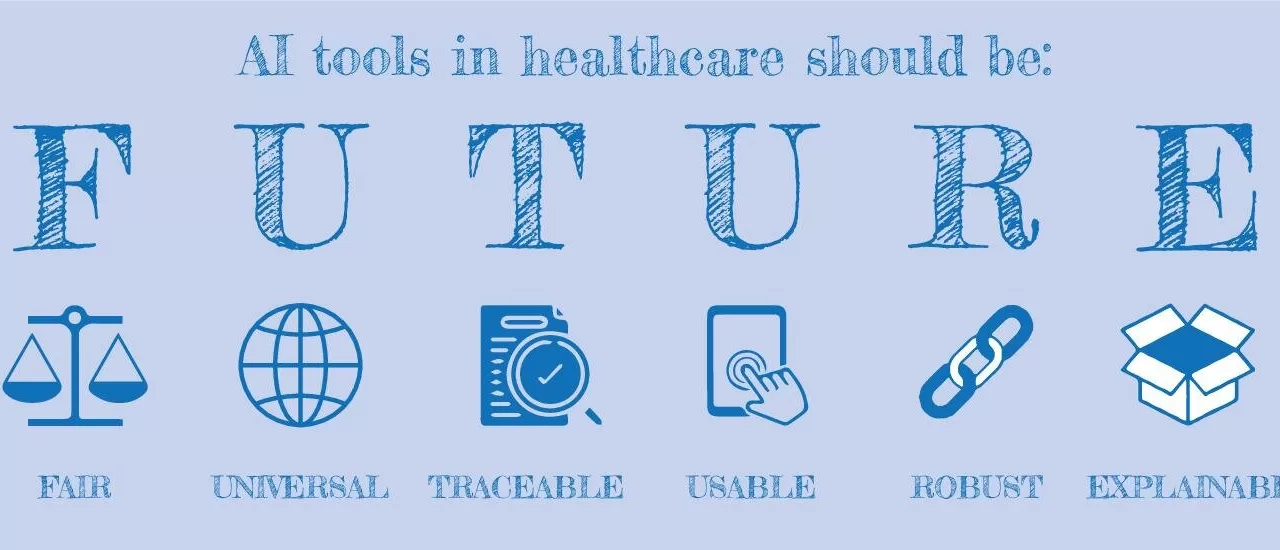

To address these critical challenges, the FUTURE-AI Consortium, an international collaboration of 117 experts from 50 countries, has developed a comprehensive set of guidelines published in the British Medical Journal (BMJ). These guidelines provide a roadmap for creating responsible and reliable AI tools for healthcare, built upon six fundamental principles:

- Fairness: Ensuring equitable treatment for all patients, free from bias.

- Universality: Promoting applicability across diverse healthcare contexts and populations.

- Traceability: Enabling the tracking of AI decision-making processes.

- Usability: Designing user-friendly tools for both healthcare professionals and patients.

- Robustness: Ensuring reliable performance under varying conditions.

- Explainability: Providing clear explanations of AI conclusions to patients and clinicians.

“These guidelines fill an important gap in the field of healthcare AI to give clinicians, patients, and health authorities the confidence to adopt AI tools knowing they are technically sound, clinically safe, and ethically aligned,” said Gary Collins, Professor of Medical Statistics at the Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences (NDORMS), University of Oxford, and a co-author of FUTURE-AI. “The FUTURE-AI framework is designed to evolve over time, adapting to new technologies, challenges, and stakeholder feedback. This dynamic approach ensures the guidelines remain relevant and useful as the field of health care AI continues to rapidly advance.”

The FUTURE-AI guidelines represent a significant step towards fostering trust and responsible innovation in healthcare AI.

More information: Karim Lekadir et al, FUTURE-AI: international consensus guideline for trustworthy and deployable artificial intelligence in healthcare, BMJ (2025). DOI: 10.1136/bmj-2024-081554

Journal information: British Medical Journal (BMJ)

Disclaimer: This news article is based on information provided and should not be considered medical or professional advice. The application of AI in healthcare is a complex and evolving field. Readers should consult with qualified healthcare professionals for any health concerns or before making decisions related to their health or treatment. The effectiveness and safety of AI tools in healthcare may vary, and the guidelines provided are intended to promote responsible development and deployment, not guarantee specific outcomes.