In the world of auditory processing, timing is everything. New research from MIT’s McGovern Institute for Brain Research shows that the precise timing of neural spikes in response to sound waves is crucial for how we interpret auditory information in real-world scenarios. From recognizing voices to localizing sounds, the ability to process sounds with high temporal accuracy is central to many of the auditory tasks we perform daily.

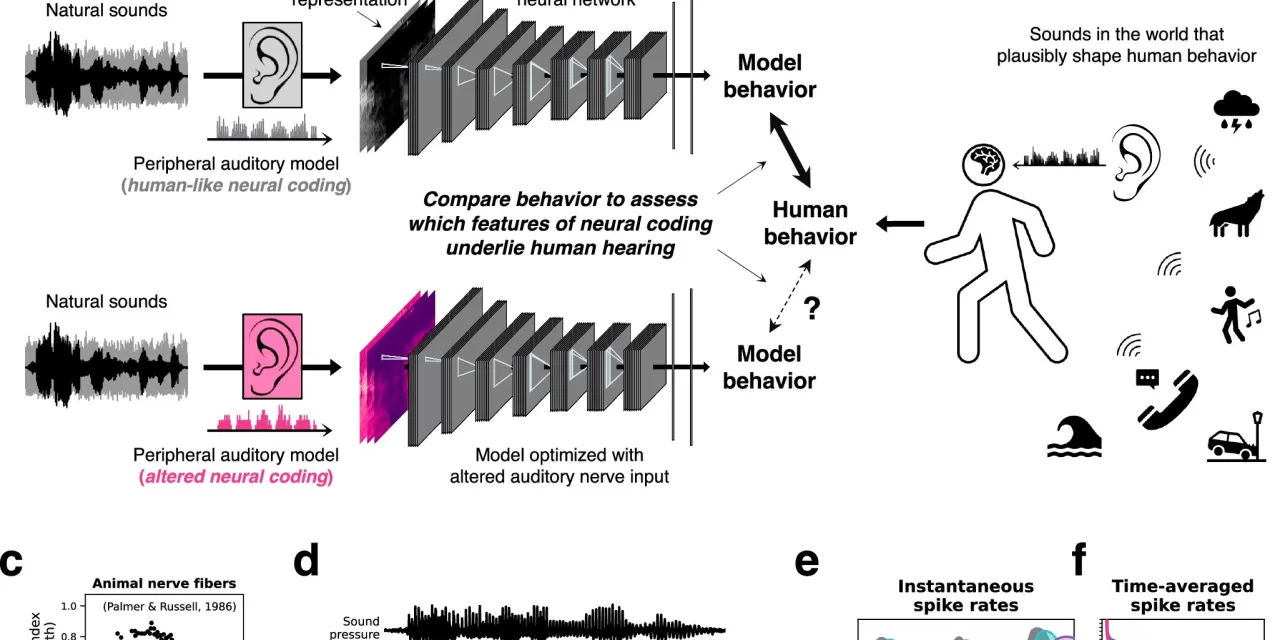

The study, led by MIT Professor Josh McDermott and graduate student Mark Saddler, uses machine learning models to explore the intricacies of human hearing. Published in Nature Communications, the research shows that neural spikes, the rapid electrical pulses fired by auditory neurons, must be timed with high precision relative to the oscillations of incoming sound waves. This timing, known as phase-locking, allows the brain to decode complex auditory signals.

“When sound waves reach the ear, the neurons fire in sync with the peaks of the sound’s oscillations. This allows us to distinguish between different pitches, recognize familiar voices, and even pinpoint the direction of sounds in noisy environments,” explained McDermott, who is also a professor in MIT’s Department of Brain and Cognitive Sciences.
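To make the idea of firing "in sync with the peaks" concrete, here is a minimal Python sketch. It is a toy illustration, not the study's model: the 500 Hz tone, the two hypothetical spike trains, and the use of vector strength (a standard measure of phase-locking that the article itself does not mention) are all assumptions chosen for clarity.

```python
import cmath
import math


def vector_strength(spike_times, freq_hz):
    """Return a value in [0, 1]; 1 means every spike lands at the same phase."""
    if not spike_times:
        return 0.0
    # Map each spike to a unit vector whose angle is the stimulus phase at that time.
    phasors = [cmath.exp(2j * math.pi * freq_hz * t) for t in spike_times]
    return abs(sum(phasors) / len(phasors))


freq_hz = 500.0          # illustrative tone frequency (assumption)
period = 1.0 / freq_hz

# Hypothetical neuron that fires once per cycle, at the peak of the sine wave
# (sin(2*pi*f*t) peaks a quarter period into each cycle).
locked_spikes = [(k + 0.25) * period for k in range(200)]

# Hypothetical neuron whose spikes are spread evenly across all phases.
unlocked_spikes = [(k + (k % 10) / 10.0) * period for k in range(200)]

vs_locked = vector_strength(locked_spikes, freq_hz)
vs_unlocked = vector_strength(unlocked_spikes, freq_hz)
print(f"phase-locked: {vs_locked:.3f}, not phase-locked: {vs_unlocked:.3f}")
```

A vector strength near 1 means the spike times carry the tone's phase, and a value near 0 means that timing information is absent even though the firing rate is identical.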

The study’s findings indicate that even minor disruptions in spike timing can impair these vital auditory functions. To test this, the researchers used artificial neural networks to simulate the auditory system. The model was trained on real-world auditory tasks, such as recognizing words and voices amid background noise, that mirror the challenges humans face in everyday environments. When the timing of the simulated neural spikes was altered, the model’s ability to recognize sounds diminished, mimicking the difficulties experienced by individuals with hearing impairments.
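The effect of disrupted spike timing can be illustrated with a small Python sketch. This is not the researchers' neural network. It is a toy example that assumes Gaussian timing jitter as a stand-in for "altered" spike timing (the article does not say how timing was altered), and the 500 Hz tone, jitter values, and function names are hypothetical.

```python
import cmath
import math
import random


def vector_strength(spike_times, freq_hz):
    """Phase-locking measure in [0, 1]; 1 means all spikes share one phase."""
    phasors = [cmath.exp(2j * math.pi * freq_hz * t) for t in spike_times]
    return abs(sum(phasors) / len(phasors))


def jittered_spikes(freq_hz, n_spikes, jitter_sd_s, rng):
    """One spike per cycle at a fixed phase, plus Gaussian timing noise."""
    period = 1.0 / freq_hz
    return [(k + 0.25) * period + rng.gauss(0.0, jitter_sd_s)
            for k in range(n_spikes)]


rng = random.Random(0)                        # fixed seed for repeatability
freq_hz = 500.0                               # illustrative tone (assumption)
jitter_sds_ms = [0.0, 0.1, 0.5, 2.0]          # illustrative jitter levels (assumption)

results = []
for sd_ms in jitter_sds_ms:
    spikes = jittered_spikes(freq_hz, 2000, sd_ms / 1000.0, rng)
    results.append(vector_strength(spikes, freq_hz))

for sd_ms, vs in zip(jitter_sds_ms, results):
    print(f"jitter {sd_ms:.1f} ms -> vector strength {vs:.2f}")
```

In this toy setting, jitter that is small relative to the 2 ms period of the tone leaves phase-locking largely intact, while jitter comparable to the period erases it. For Gaussian jitter with standard deviation σ, the expected vector strength is exp(-(2πfσ)²/2), so the same amount of jitter is more damaging at higher frequencies.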

The findings suggest that understanding this precise timing could be key to improving treatments for hearing loss, such as cochlear implants and hearing aids. “Current hearing technologies, including cochlear implants, are limited in certain ways. By refining these devices to better replicate the temporal precision found in natural hearing, we could greatly enhance their effectiveness,” said McDermott.

The research also holds promise for advancing the diagnosis of hearing loss. By simulating different types of auditory impairment with machine learning models, scientists could better understand which specific aspects of hearing are affected and develop targeted therapies.

Ultimately, this work bridges neuroscience and artificial intelligence, offering a more nuanced understanding of how our brains process sound and opening new avenues for improving hearing-related technologies.

For more details, refer to the study: Mark R. Saddler et al., “Models optimized for real-world tasks reveal the task-dependent necessity of precise temporal coding in hearing,” Nature Communications (2024). DOI: 10.1038/s41467-024-54700-5.