The rapid advancement of generative artificial intelligence (AI) and deepfake technology is fueling a surge in health misinformation, leaving Australians vulnerable to scams and potentially harmful advice. Experts are urging the public to be vigilant and learn how to spot manipulated content.

False and misleading health information is proliferating online and on social media, with deepfakes being used to create realistic videos, photos, and audio of respected health professionals. These manipulated materials are often used to endorse fake health-care products or trick individuals into sharing sensitive health information.

The problem is compounded by the increasing reliance on online health information. In 2021, three in four Australians over 18 accessed health services online, and a 2023 study found that 82% of Australian parents consult social media about health-related issues. This reliance, coupled with the exponential growth of health-related misinformation and disinformation, creates fertile ground for scams.

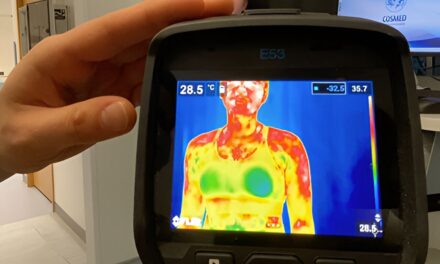

Deepfake technology, which alters real images and audio to make individuals appear to say or do things they haven’t, is becoming increasingly sophisticated. While photo and video editing tools have existed for some time, generative AI has dramatically increased the speed and realism of deepfakes.

Examples of Deepfake Health Scams:

- In December 2024, Diabetes Victoria raised the alarm about deepfake videos featuring experts from The Baker Heart and Diabetes Institute promoting a diabetes supplement. Both organizations clarified that the videos were fake and that they did not endorse the supplement.

- In April 2024, scammers used deepfake images of Dr. Karl Kruszelnicki to sell pills on Facebook.

- In 2023, TikTok Shop faced scrutiny after sellers manipulated doctors' legitimate TikTok videos to falsely endorse products; the altered videos garnered millions of views.

How to Spot Deepfakes:

Australia’s eSafety Commissioner recommends the following:

- Consider the context: Does the content seem plausible for the person and setting?

- Look and listen carefully for:

  - Blurring, cropped effects, or pixelation.

  - Skin inconsistency or discoloration.

  - Video glitches, or changes in lighting or background.

  - Audio problems, such as badly synced sound.

  - Irregular blinking or unnatural movement.

  - Gaps in the storyline or speech.

Staying Safe:

- If your own images or voice have been altered, contact the eSafety Commissioner.

- Verify endorsements by contacting the person featured.

- Leave public comments to question the veracity of claims.

- Use platform reporting tools to flag fake content.

- Encourage others to be critical and seek advice from qualified health-care providers.

- Consult doctors, pharmacists and other qualified health-care professionals.

- At the policy level, the 2025 Online Safety Review recommends that Australia adopt duty of care legislation to address harms from "instruction or promotion of harmful practices."

As AI technology advances, government intervention is crucial to protect Australians from the dangers of deepfake health scams.

Disclaimer: This news article should not be considered medical or legal advice. Always consult qualified professionals on health and legal matters. Because AI and online information evolve rapidly, new scams and methods may emerge. Stay informed and vigilant.