Tokyo, Japan – Researchers from the International Research Center for Neurointelligence (IRCN) at The University of Tokyo and Tohoku University have uncovered new insights into how the brain maintains and updates information using tree-structured representations, a framework widely used to model reasoning, problem-solving, and language processing.

In a recent study published in the Proceedings of the National Academy of Sciences, the researchers trained monkeys to perform a group reversal task that required them to retain an associative rule while integrating sensory cue information. By recording neural activity in the prefrontal cortex (PFC), the study aimed to shed light on the mechanisms that keep cognitive control stable while still allowing it to be updated when necessary.

Stability and Integration in Neural Dynamics

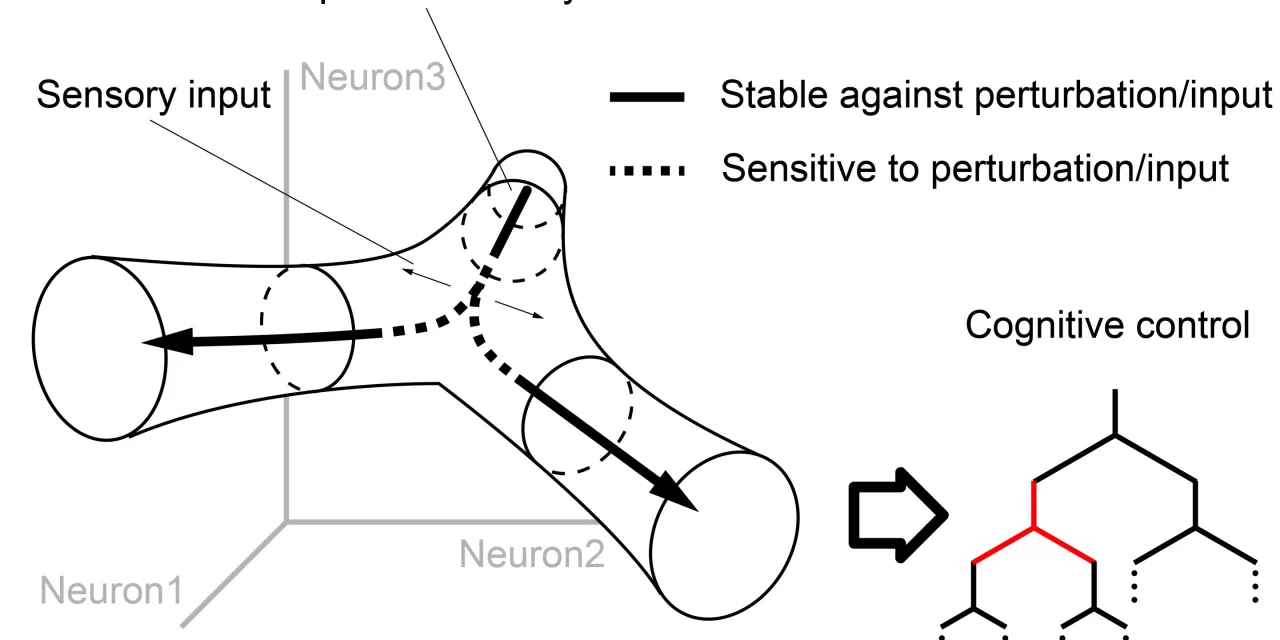

The study confirmed previous findings that the brain maintains rules as a stable state of its neural dynamics. This stability means that when a perturbation occurs, the system returns to its original state, much like a ball settling back to the bottom of a bowl.
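The "ball in a bowl" picture can be made concrete with a toy dynamical system. The sketch below is an illustrative assumption, not the model used in the study: it integrates the downhill flow dx/dt = -k·x on a bowl-shaped potential V(x) = k·x²/2, where the hypothetical parameter `k` sets how steep the bowl is. Any perturbation of the state decays back toward the bottom of the bowl at x = 0.

```python
def simulate_bowl(x0, k=1.0, dt=0.01, steps=1000):
    """Toy attractor (illustrative assumption, not the study's PFC model).

    Euler-integrates dx/dt = -k * x, the downhill flow on the bowl-shaped
    potential V(x) = k * x**2 / 2, for each coordinate of the state x0.
    Returns the list of states visited, starting with x0.
    """
    state = [float(v) for v in x0]
    trajectory = [list(state)]
    for _ in range(steps):
        # Each coordinate is pulled back toward the bottom of the bowl at 0.
        state = [v + dt * (-k * v) for v in state]
        trajectory.append(list(state))
    return trajectory


# Perturb the "rule" state away from the bottom of the bowl and let it relax.
traj = simulate_bowl([1.0, -0.5])
print(traj[0], traj[-1])
```

The final state ends up a tiny distance from the origin. As long as `k * dt` stays below 1, a larger `k` (a steeper bowl) makes the return faster.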

When a sensory cue was presented, however, it induced transient neural activity that allowed information from the cue to be integrated into the brain’s decision-making process. Notably, the researchers observed a 70-millisecond delay between the onset of this transient activity and the actual integration. The delay puzzled the team because it could not be explained by slow synaptic transmission.

The Role of Delay in Cognitive Flexibility

Lead author Muyuan Xu explains that maintaining and updating information involves fundamentally opposing requirements. While stability is needed to retain information, sensitivity (or controlled instability) is essential for integrating new inputs. The observed delay appears to serve as a mechanism that temporarily destabilizes the PFC, preparing it for information integration.

To investigate this phenomenon further, the team trained a recurrent neural network (RNN) to perform an analogous task. Analysis of the RNN revealed that the system was rapidly destabilized during the delay, making it more sensitive to incoming sensory information. This mechanism, termed a “branching channel,” describes a dynamical process in which the system remains stable until it reaches a branching point, where it becomes unstable along one specific dimension so that new information can be incorporated efficiently.
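One textbook way to picture a "branching channel" is a pitchfork bifurcation. The sketch below swaps in that standard normal form as an illustrative assumption; it is not the recurrent network trained in the study, and the function name `branching_channel`, its parameters (`cue`, `t_branch`, `t_end`, `dt`) and all constants are hypothetical. The state has two dimensions: x stays stable throughout, while y is stable (a = -1) until the branching point `t_branch` and unstable at y = 0 (a = +1) afterwards. Before the branching point a small cue barely moves y; after it, the same cue decides which of two branches y commits to.

```python
def branching_channel(cue, t_branch=3.0, t_end=10.0, dt=0.01):
    """Toy 'branching channel' (pitchfork normal form; illustrative assumption).

    x: a direction that stays stable throughout (dx/dt = -x).
    y: the branching direction, dy/dt = a(t) * y - y**3 + cue, where
       a(t) = -1 before t_branch (stable) and +1 after it (unstable at y = 0).
    Returns the final (x, y) after Euler integration from (0.5, 0.0).
    """
    x, y = 0.5, 0.0
    steps = int(round(t_end / dt))
    for i in range(steps):
        t = i * dt
        a = -1.0 if t < t_branch else 1.0  # sign flip marks the branching point
        dx = -x                             # always pulled back toward x = 0
        dy = a * y - y**3 + cue             # small cue, amplified once a > 0
        x += dt * dx
        y += dt * dy
    return x, y


for c in (0.05, -0.05):
    print(c, branching_channel(c))
# Without a branching point (t_branch beyond t_end), the cue only nudges y.
print(branching_channel(0.05, t_branch=20.0))
```

With cues of opposite sign, y settles on opposite branches near +1 and -1, while x decays in both runs. Moving `t_branch` past `t_end` removes the branching point, and y then stays close to the small cue value, mirroring the stable regime in which new input is largely ignored.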

Implications for Neuroscience and Artificial Intelligence

This research offers a new perspective on cognitive control and structured representations in neural networks. The findings suggest that neural networks can not only employ rule-like structures but also learn them dynamically.

The study also contributes to the ongoing debate between symbolic and connectionist paradigms in artificial intelligence (AI). While symbolic AI relies on structured rules, connectionist AI (such as neural networks) traditionally learns through pattern recognition. This new evidence suggests that neural networks might bridge the gap between these two paradigms, enhancing their ability to model higher cognitive functions.

“This work advances our understanding of how the brain dynamically balances stability and flexibility, a fundamental aspect of intelligence,” Xu added.

The research has broad implications for both neuroscience and AI and could lead to a better understanding of cognitive disorders and to improved machine learning models.

Reference

Muyuan Xu et al., Dynamic tuning of neural stability for cognitive control, Proceedings of the National Academy of Sciences (2024). DOI: 10.1073/pnas.2409487121

Disclaimer: This article is based on research findings published in a peer-reviewed journal. The interpretations and implications discussed are for informational purposes only and do not constitute medical or scientific consensus.