Researchers have found that brain activity associated with specific words is mirrored between a speaker and a listener during a conversation. The finding sheds light on “brain-to-brain coupling,” a phenomenon in which the brain activity of two people synchronizes as they interact. Previously, it was unclear how much of this synchronization was due to linguistic information rather than other factors such as body language or tone of voice.

Published on August 2 in the journal Neuron, the study reveals that brain-to-brain coupling during conversation can be accurately modeled by considering the words used and their contextual meanings.

“We can see linguistic content emerge word-by-word in the speaker’s brain before they actually articulate what they’re trying to say, and the same linguistic content rapidly reemerges in the listener’s brain after they hear it,” explains Zaid Zada, a neuroscientist at Princeton University and the study’s first author.

Verbal communication relies on shared definitions of words, which can vary with context. For instance, the word “cold” could refer to temperature, a personality trait, or a respiratory infection, depending on the context.

“The contextual meaning of words as they occur in a particular sentence, or in a particular conversation, is really important for the way that we understand each other,” says Samuel Nastase, co-senior author and neuroscientist at Princeton University. “We wanted to test the importance of context in aligning brain activity between speaker and listener to try to quantify what is shared between brains during conversation.”

To explore this, the researchers collected brain activity data and conversation transcripts from pairs of epilepsy patients engaged in natural conversations. These patients were undergoing intracranial monitoring with electrocorticography at the New York University School of Medicine Comprehensive Epilepsy Center. This method, which involves placing electrodes directly on the brain’s surface, records brain activity at much higher resolution than less invasive techniques such as fMRI.

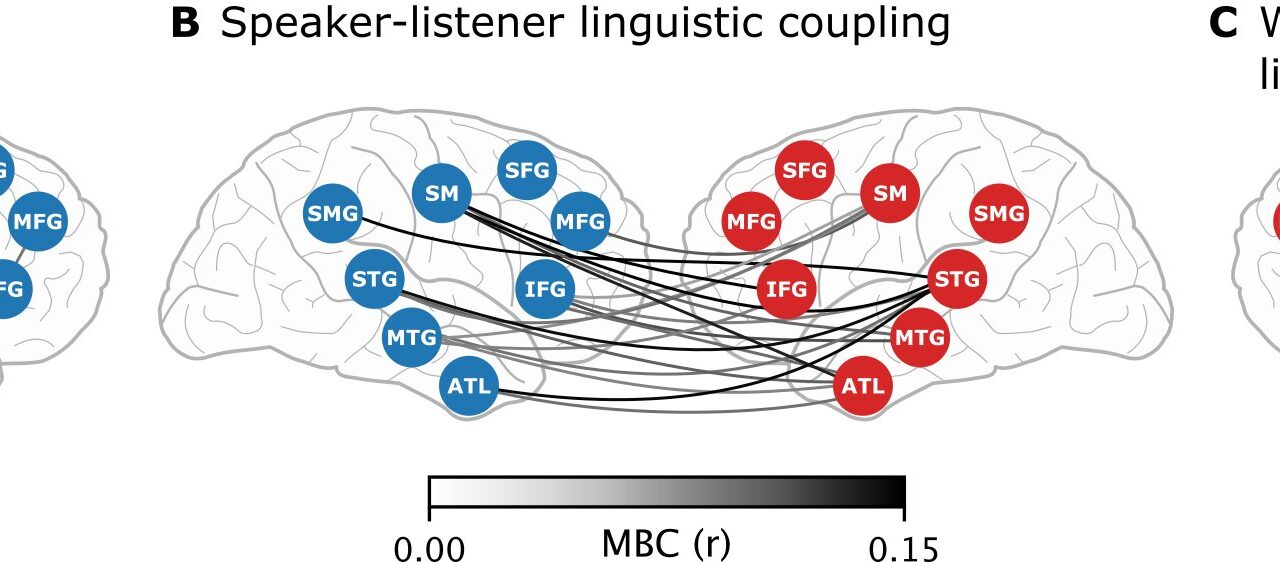

The team employed the large language model GPT-2 to extract contextual information for each word in the conversations. They then used this data to train a model that predicts how brain activity changes as information flows from speaker to listener.
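As a rough illustration of the idea in the previous paragraph, the sketch below fits a linear "encoding model" that maps a per-word context vector to activity at several electrodes, then checks how well it predicts held-out words. It is not the study's pipeline: the vectors are random stand-ins for GPT-2 embeddings, the electrode data are simulated, and the choice of ridge regression, along with every name and size in the code, is an assumption made for the example.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def columnwise_corr(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    return (A * B).sum(axis=0) / (
        np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
    )

rng = np.random.default_rng(0)
n_words, n_dims, n_electrodes = 600, 20, 5  # hypothetical sizes

# Stand-in for contextual embeddings: one vector per word in the transcript.
# In a real analysis these would come from a language model such as GPT-2.
embeddings = rng.standard_normal((n_words, n_dims))

# Simulated recordings: each brain expresses the same word vectors through
# its own (unknown) electrode weights, plus measurement noise.
speaker_weights = rng.standard_normal((n_dims, n_electrodes))
listener_weights = rng.standard_normal((n_dims, n_electrodes))
speaker = embeddings @ speaker_weights + 0.5 * rng.standard_normal((n_words, n_electrodes))
listener = embeddings @ listener_weights + 0.5 * rng.standard_normal((n_words, n_electrodes))

# Fit on the first 400 words, evaluate on the remaining 200.
train, test = slice(0, 400), slice(400, 600)
W_speaker = fit_ridge(embeddings[train], speaker[train])
W_listener = fit_ridge(embeddings[train], listener[train])

speaker_scores = columnwise_corr(embeddings[test] @ W_speaker, speaker[test])
listener_scores = columnwise_corr(embeddings[test] @ W_listener, listener[test])

print("speaker held-out correlation per electrode:", speaker_scores.round(2))
print("listener held-out correlation per electrode:", listener_scores.round(2))
```

Because both simulated brains are driven by the same word vectors, the same embedding space predicts held-out activity in each of them, which is the sense in which a shared linguistic space can link speaker and listener.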

The study showed that brain activity related to the context-specific meaning of words peaked in the speaker’s brain around 250 milliseconds before they spoke each word. Corresponding peaks appeared in the listener’s brain approximately 250 milliseconds after they heard each word.
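Timing results like these typically come from a lag analysis: a word-level feature is compared with brain activity shifted earlier or later in time, and the shift with the strongest match is reported. The sketch below shows that logic on simulated data. The 50-millisecond bins, the single one-dimensional feature, and the built-in 250-millisecond offsets are all assumptions chosen to mirror the numbers above, not values taken from the study's code.

```python
import numpy as np

def lagged_correlation(feature, signal, lags):
    """Correlate feature[t] with signal[t + lag] for each lag (in samples)."""
    n = len(feature)
    scores = []
    for lag in lags:
        if lag < 0:
            f, s = feature[-lag:], signal[:n + lag]
        else:
            f, s = feature[:n - lag], signal[lag:]
        scores.append(np.corrcoef(f, s)[0, 1])
    return np.array(scores)

rng = np.random.default_rng(1)
bin_ms = 50          # hypothetical width of one time bin
shift = 5            # 5 bins * 50 ms = 250 ms
n_samples = 2000

# A one-dimensional stand-in for word-level linguistic content over time.
feature = rng.standard_normal(n_samples)

# Simulated speaker activity carries the content 250 ms BEFORE the word;
# simulated listener activity carries it 250 ms AFTER the word.
speaker = np.roll(feature, -shift) + rng.standard_normal(n_samples)
listener = np.roll(feature, shift) + rng.standard_normal(n_samples)

lags = np.arange(-10, 11)  # from -500 ms to +500 ms
speaker_peak_ms = lags[np.argmax(lagged_correlation(feature, speaker, lags))] * bin_ms
listener_peak_ms = lags[np.argmax(lagged_correlation(feature, listener, lags))] * bin_ms

print("speaker peak lag (ms):", speaker_peak_ms)
print("listener peak lag (ms):", listener_peak_ms)
```

By construction, the speaker's peak falls at a negative lag (before word onset) and the listener's at a positive lag (after word onset); with real recordings the peak locations are an empirical result rather than something built into the data.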

The researchers’ context-based model predicted shared patterns of brain activity more accurately than approaches used in earlier studies of brain coupling.

“This shows just how important context is, because it best explains the brain data,” says Zada. “Large language models take all these different elements of linguistics like syntax and semantics and represent them in a single high-dimensional vector. We show that this type of unified model is able to outperform other hand-engineered models from linguistics.”

Looking ahead, the researchers plan to apply their model to other types of brain activity data, such as fMRI, to investigate how various brain regions coordinate during conversations.

“There’s a lot of exciting future work to be done looking at how different brain areas coordinate with each other at different timescales and with different kinds of content,” Nastase adds.

For further details, refer to the full study: “A shared model-based linguistic space for transmitting our thoughts from brain to brain in natural conversations,” Neuron (2024). DOI: 10.1016/j.neuron.2024.06.025.