A new AI-assisted dataset could change how medical professionals detect cancer and diagnose abdominal conditions. An international team of researchers, led by Johns Hopkins Bloomberg Distinguished Professor Alan Yuille, has unveiled AbdomenAtlas, the largest abdominal CT dataset to date, designed to speed up the annotation of medical images and ultimately aid early cancer detection.

The initiative addresses a major challenge in radiology: the time-consuming task of manually labeling medical images. Traditionally, radiologists have spent thousands of hours identifying and labeling organs in CT scans, work that is both labor-intensive and prone to human error. AbdomenAtlas seeks to streamline this process with AI-based computer vision models that produce annotations faster and more accurately.

At the heart of AbdomenAtlas is a dataset of more than 45,000 3D CT scans from 145 hospitals worldwide, annotated with 142 anatomical structures, which makes it more than 36 times larger than its closest competitor. The collection has been described as a major leap forward in medical imaging. According to the lead author, Zongwei Zhou, an assistant research scientist in the Whiting School of Engineering’s Department of Computer Science, annotating 45,000 CT scans with 6 million anatomical shapes by hand would have been impossible for a single radiologist, even one who had started in 420 BCE.
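
As a rough check on the timescale in that comparison, the span from 420 BCE to 2024 (the paper's publication year) can be computed directly. This is only a back-of-the-envelope sketch: the function name is illustrative, and the calculation assumes the historical calendar, which has no year zero.

```python
def years_between(bce_year: int, ce_year: int) -> int:
    """Years elapsed from a BCE year to a CE year (historical calendar)."""
    # 1 BCE is followed directly by 1 CE, so subtract 1 from the naive sum.
    return bce_year + ce_year - 1


span = years_between(420, 2024)
print(span)  # 2443
```

That is roughly 2,440 years, consistent with the article's figure of "over two millennia" for manual annotation.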

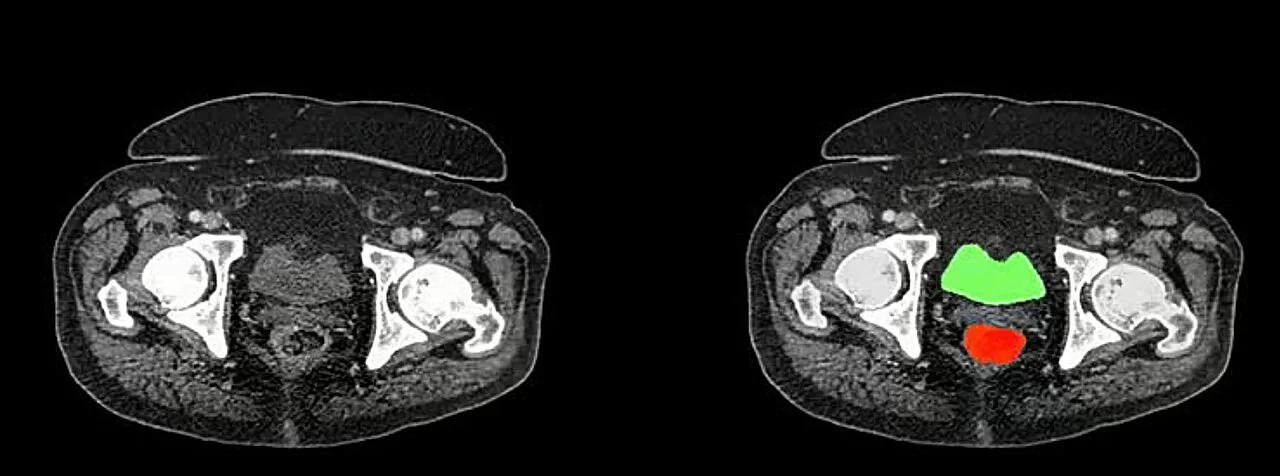

With AI algorithms, the task was completed in under two years; done manually, it would have taken over two millennia. Using a combination of three AI models trained on publicly available datasets, the team dramatically sped up the organ-labeling process. The models predict labels for unlabeled scans, and color-coded attention maps highlight the areas that need manual refinement by expert radiologists. This human-AI collaboration achieved a tenfold speedup for tumor labeling and a 500-fold speedup for organ labeling.
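
The article does not say how the attention maps are computed. One simple way to picture the idea is to flag the voxels where the three models disagree, so that radiologists review only those regions. The sketch below is a toy illustration under that assumption, not the team's method; `disagreement_scores`, `flag_for_review`, the flattened label layout, and the 0.3 threshold are all hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def disagreement_scores(predictions: Sequence[Sequence[int]]) -> List[float]:
    """Per-voxel disagreement: 0.0 when all models agree, higher otherwise.

    `predictions` holds one flattened label map per model (assumed layout).
    """
    n_models = len(predictions)
    scores = []
    for voxel_labels in zip(*predictions):
        # Number of models that voted for the most common label at this voxel.
        top_votes = Counter(voxel_labels).most_common(1)[0][1]
        scores.append(1.0 - top_votes / n_models)
    return scores


def flag_for_review(scores: Sequence[float], threshold: float = 0.3) -> List[int]:
    """Indices of voxels whose disagreement exceeds the (assumed) threshold."""
    return [i for i, s in enumerate(scores) if s > threshold]


# Three toy models labelling five voxels (0 = background, 1 = liver, 2 = spleen).
model_a = [0, 1, 1, 2, 0]
model_b = [0, 1, 2, 2, 0]
model_c = [0, 1, 0, 2, 1]

scores = disagreement_scores([model_a, model_b, model_c])
print(flag_for_review(scores))  # [2, 4]
```

Only voxels 2 and 4 are flagged for a human to check; the three voxels where all models agree are accepted as predicted. Applied at scale, that is the kind of saving the reported speedups reflect.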

This innovation has not only made it easier to annotate abdominal organs but also allowed the team to expand the dataset’s scale and precision. The ultimate goal is to train AI models that can detect cancerous tumors with greater accuracy and create digital twins of patients to enhance personalized medicine. AbdomenAtlas now serves as an invaluable resource for the global medical imaging community, allowing research teams to benchmark and improve the performance of their segmentation algorithms in real-world clinical settings.
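
The article does not name the metric used for benchmarking. A common choice for comparing a predicted segmentation against a reference annotation is the Dice similarity coefficient, 2|A∩B| / (|A| + |B|), which ranges from 0 (no overlap) to 1 (identical masks). The sketch below assumes binary masks flattened into lists; the function name and the convention for two empty masks are illustrative choices.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice overlap between two binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    # Voxels marked as foreground in both masks.
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Convention (assumed here): two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * overlap / total


predicted = [0, 1, 1, 1, 0, 0]
reference = [0, 1, 1, 0, 1, 0]
print(round(dice_coefficient(predicted, reference), 3))  # 0.667
```

Here each mask has three foreground voxels and two of them coincide, giving 2·2 / (3 + 3) ≈ 0.667.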

“This project is a major milestone in AI’s potential to assist radiologists in detecting tumors and diagnosing diseases. By leveraging the data and AI models, we are making strides toward more accurate and efficient cancer detection,” said Wenxuan Li, the first author of the study and a graduate student in computer science.

The research team plans to release AbdomenAtlas to the public and to offer new challenges aimed at improving algorithmic performance in clinical environments. They also emphasize that cross-institutional collaboration is needed to fill the remaining gaps, as the current dataset accounts for only a small fraction of the CT scans produced annually in the United States.

The impact of AbdomenAtlas on the medical imaging field could be profound, enabling early cancer detection, improving diagnostic accuracy, and eventually contributing to better patient outcomes across the globe.

Disclaimer: This article is based on the research paper “AbdomenAtlas: A large-scale, detailed-annotated, & multi-center dataset for efficient transfer learning and open algorithmic benchmarking” published in Medical Image Analysis (2024). The findings of this research represent the views of the authors and are not necessarily reflective of clinical practice. The dataset is still in the process of being expanded, and further validation in real-world clinical environments is ongoing.