University Hospital Bonn (UKB) and the University of Bonn demonstrate how local large language models (LLMs) can ensure privacy in radiological data processing, offering an effective alternative to commercial models.

Artificial intelligence (AI) is transforming healthcare, and hospitals increasingly use large language models (LLMs) to structure medical data such as radiology reports. Safeguarding patient data, however, remains a critical concern. Researchers at the University Hospital Bonn (UKB) and the University of Bonn have now shown that local LLMs can analyze radiology reports without compromising data privacy. Because all data stays within the hospital's own systems, this approach protects patient information while still drawing on the power of AI.

The study, recently published in the journal Radiology, compares a range of LLMs for extracting findings from free-text chest radiography reports, a task that matters to both clinicians and researchers. In particular, it highlights the advantages of open-weights LLMs, which can run on local hospital servers, over commercial closed-weights models, which require sensitive patient data to be transferred to external servers.

Dr. Sebastian Nowak, first author of the study and postdoctoral researcher at UKB, explains the challenge: “Commercial closed models require data transfer to external servers, often outside the EU, which is not advisable for patient data due to privacy concerns. In contrast, open models can be run and even further trained on-site, keeping all patient information secure.”

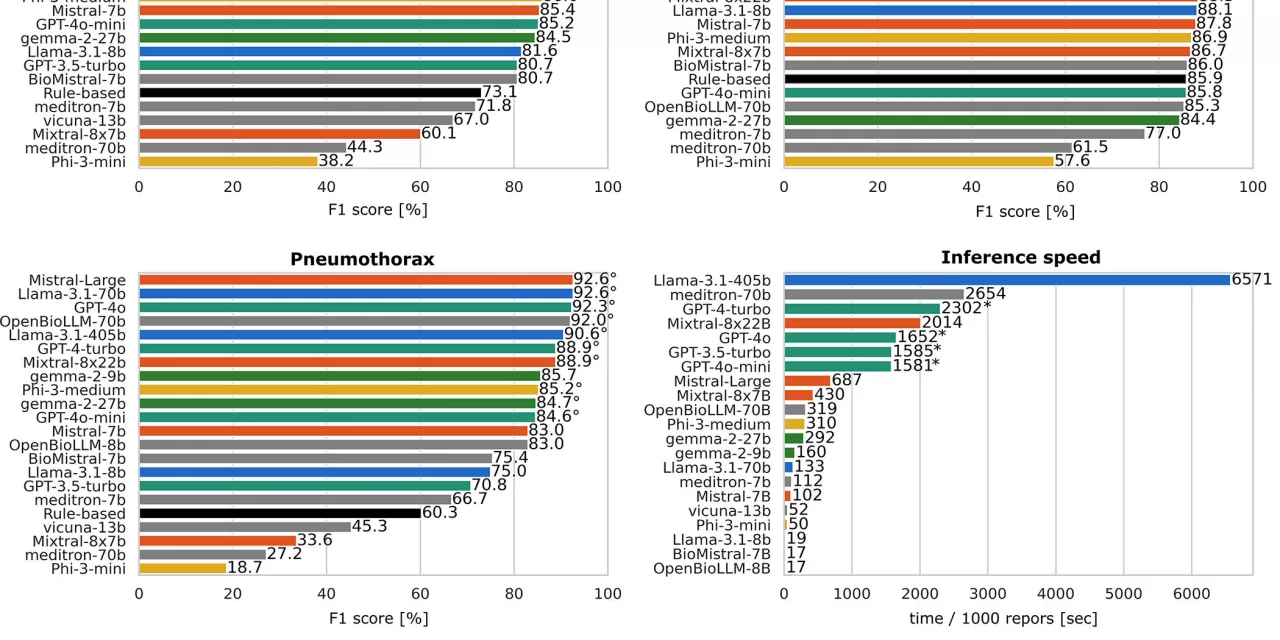

The researchers tested 17 open-weights and four closed-weights models on thousands of radiology reports, using both publicly available, non-protected reports and data-protected reports from UKB. Without additional training, larger open models outperformed smaller ones. Once the models were fine-tuned on reports that had already been structured, however, smaller models achieved accuracy on par with the larger ones.

Prof. Julian Luetkens, the study’s senior author and Director of Diagnostic and Interventional Radiology at UKB, emphasizes the importance of maintaining privacy: “Patient data must remain confidential, and this study proves that open LLMs can be as effective as commercial models while being developed locally in compliance with data protection laws.”

The findings suggest that open LLMs fine-tuned on a relatively small set of structured reports can match the performance of commercial models. Notably, when fine-tuned on more than 3,500 structured reports, the largest open model showed no significant advantage over a model that was 1,200 times smaller.

“This breakthrough can unlock clinical databases for extensive epidemiological research and studies into diagnostic AI, without compromising patient privacy,” Dr. Nowak says. “Ultimately, this will enhance healthcare outcomes while adhering to strict data protection standards.”

To further support adoption, the team has made their methods and training code available under an open license, enabling other hospitals to replicate the study and integrate privacy-safe AI into their systems.

The research could help hospitals improve clinical efficiency, support research, and increase diagnostic accuracy, all while protecting patient privacy.

For further details, see the full study in the journal Radiology: Alois M. Sprinkart et al., "Privacy-ensuring Open-weights Large Language Models Are Competitive with Closed-weights GPT-4 in Extracting Chest Radiography Findings from Free-Text Reports," Radiology (2025). DOI: 10.1148/radiol.240895.

GitHub Repository: github.com/ukb-rad-cfqiai/LLM_…ort_info_extraction/