January 9, 2025 – Stanford, CA – In a groundbreaking advance for oncology, researchers at Stanford Medicine have developed an artificial intelligence (AI) model capable of predicting cancer prognoses and identifying the most effective treatments by analyzing a combination of medical images and text data. The new model, named MUSK (Multimodal Transformer with Unified Mask Modeling), is poised to reshape the way physicians approach patient care, moving beyond diagnostics to more precise and personalized treatment decisions.

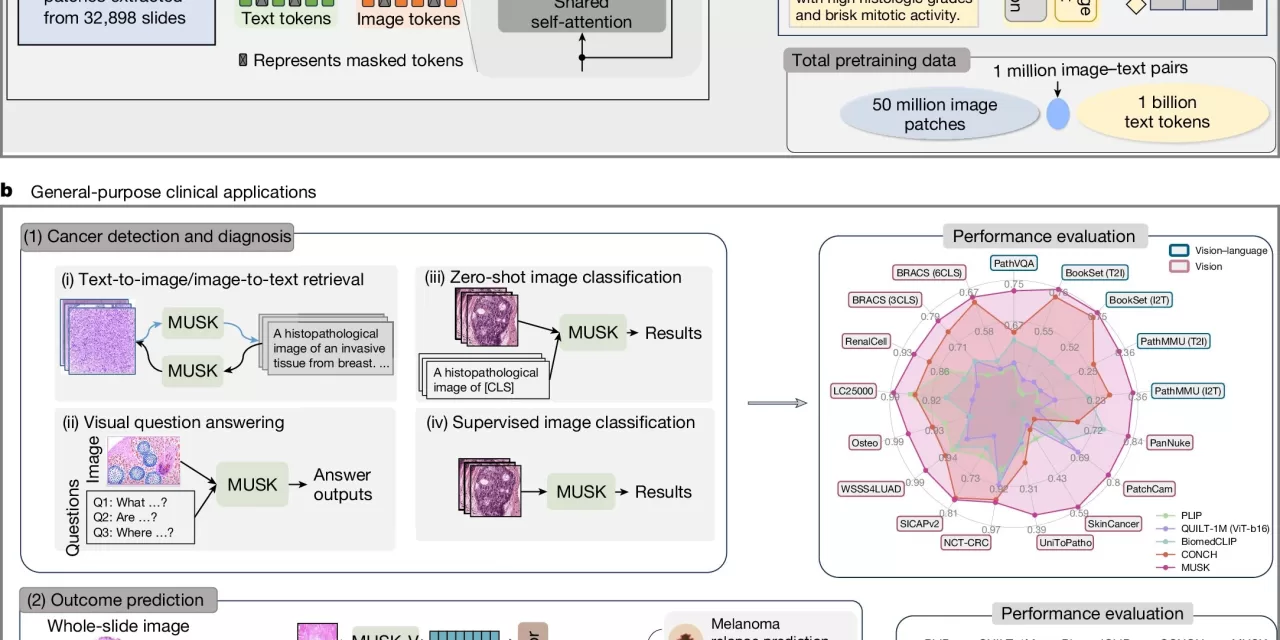

Traditionally, AI has been primarily used in medical practice for diagnostic purposes, such as identifying cancerous anomalies in X-ray images, CT scans, and tissue samples. However, predicting a patient’s long-term outcome and determining the most effective treatment requires far more complex data integration. To address this challenge, MUSK incorporates both visual data (e.g., pathology slides, CT and MRI scans) and textual data (such as physician notes and patient medical histories) into a unified system.

Developed by a team led by Dr. Ruijiang Li, an associate professor of radiation oncology at Stanford Medicine, the AI model was trained on a dataset of 50 million medical images and more than 1 billion pieces of pathology-related text. The results were promising: MUSK outperformed conventional methods in predicting patient prognoses for a variety of cancers, including lung and gastroesophageal cancers and melanoma, and could accurately identify which patients would benefit most from immunotherapy.

One of MUSK’s standout capabilities is that it can process large volumes of “unpaired” multimodal data, combining information from different sources without the need for meticulous labeling or pairing. Because it draws on both image-based and text-based data during training, the model makes more accurate predictions about patient outcomes than traditional clinical methods that rely on cancer staging or genetic markers alone.

“MUSK can accurately predict the prognosis of individuals with many different types and stages of cancer,” said Dr. Li. “We designed it to reflect real-world clinical decision-making, where physicians rarely rely on a single data type. By combining visual and text-based information, we can offer a deeper, more holistic understanding of a patient’s condition.”

In the researchers’ evaluations, MUSK proved highly effective in several key areas:

- It correctly predicted disease-specific survival 75% of the time, compared with 64% for traditional methods based on cancer staging and clinical risk factors.

- In lung cancer patients, MUSK correctly identified those likely to benefit from immunotherapy 77% of the time, compared to the standard biomarker-based method, which had an accuracy rate of just 61%.

- For melanoma, MUSK predicted the likelihood of recurrence within five years with 83% accuracy, outperforming other existing AI models.

The versatility and accuracy of MUSK represent a major step forward in personalized oncology care. By drawing on diverse data sources such as imaging, clinical histories, and laboratory results, MUSK takes a far more comprehensive approach to predicting patient outcomes and tailoring treatments.

This breakthrough comes at a time when AI is beginning to be integrated more widely into healthcare settings, and MUSK’s ability to merge different data types presents a new opportunity to refine decision-making in cancer treatment and prognosis. According to Dr. Li, foundation models like MUSK could ultimately allow healthcare providers to offer more targeted, effective therapies.

“This is a significant step toward fulfilling the unmet clinical need for AI models that can guide treatment decisions,” Dr. Li explained. “With MUSK, physicians can gain a more nuanced understanding of which treatment plan will be the most effective for each patient, improving both outcomes and efficiency.”

The team’s findings were published in the prestigious journal Nature, marking the latest in a series of AI-driven advancements in the field of medicine.

For more information, refer to the study: A Vision–Language Foundation Model for Precision Oncology, Nature (2025). DOI: 10.1038/s41586-024-08378-w.